Part 3 of our four-part guide to EU AI Act compliance examines how North American organizations can navigate the Act’s requirements, including organizational roles and key obligations.

The AI Act will come into full effect in August 2026, 24 months after it entered into force, although certain provisions will apply earlier. For a detailed understanding of the AI Act’s implementation timeline, and further information about the risk-based classification of AI systems, please refer to Part 1 and Part 2 of our blog series:

Compliance with the AI Act Part 1: Timeline and important deadlines

Compliance with the AI Act Part 2: What is ‘high-risk’ activity?

Whether your organization develops AI chatbots for customer service, uses predictive algorithms for credit assessment, or deploys image recognition software, understanding your role and responsibilities under the EU AI Act is crucial for maintaining compliance when operating in European markets.

Navigating the AI Act’s global reach

Similar to the EU’s General Data Protection Regulation (GDPR), the AI Act has extra-territorial reach, making it a significant law with global implications. Its provisions apply to any organization marketing, deploying, or using an AI system that affects individuals or businesses in the EU, no matter where the system is developed or operated.

For example, if an AI system hosted in Toronto or San Francisco generates data or decisions that impact individuals or businesses in any of the EU’s 27 Member States, that system must comply with the AI Act.

The aim of the extra-territorial scope is to ensure the fundamental rights of EU residents are respected, regardless of international boundaries. This approach seeks to promote a consistent standard of ethical AI practices, encouraging all organizations to uphold high standards of accountability and transparency.

Key organizational roles under the AI Act

Compliance obligations for organizations are determined by two main factors:

- The risk level of the AI system

- The organization’s role in the supply chain

The risk classification of AI systems is detailed in Part 2 of our blog series, so here we focus on the roles organizations can play and the specific obligations associated with each.

What role does your organization play?

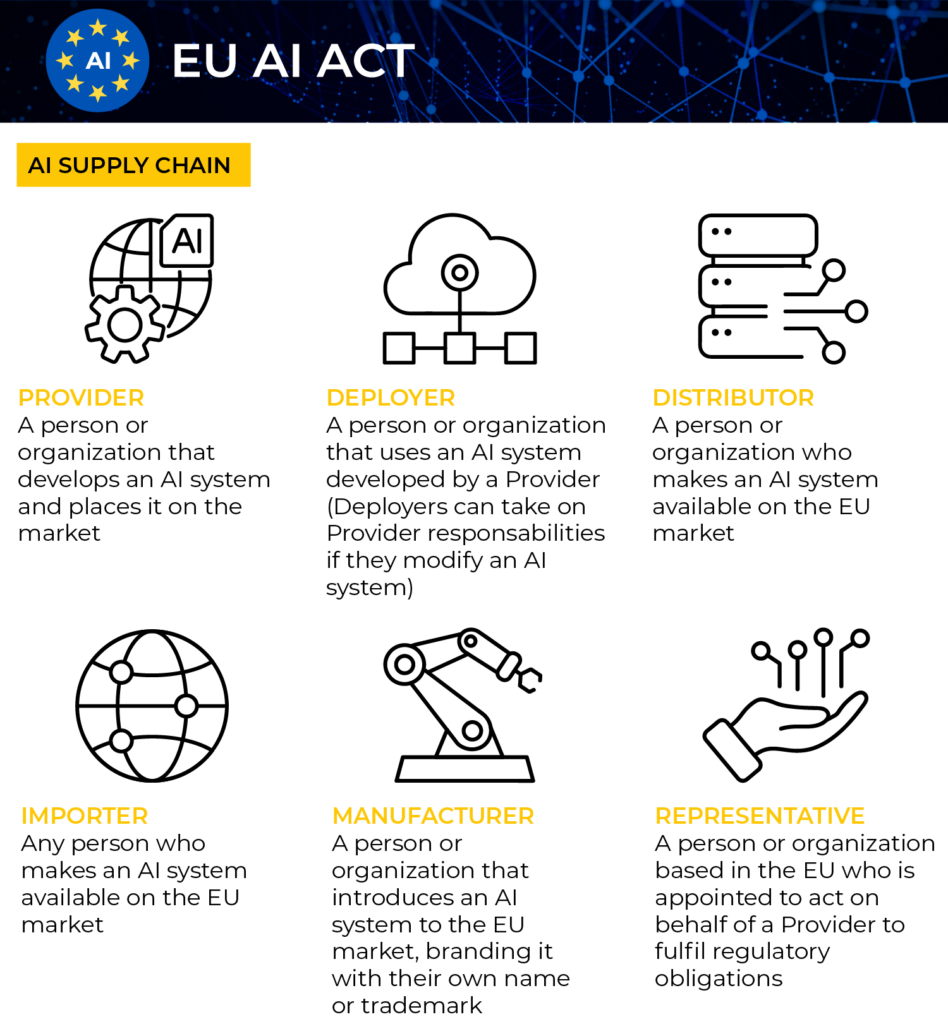

Under the AI Act, organizations fall into one of six distinct roles, each with its own set of obligations:

- Provider

An individual or organization that develops an AI system and places it on the market. Providers are responsible for ensuring their system meets the requirements of the AI Act.

- Deployer

An individual or organization using an AI system developed by a Provider. A Deployer’s responsibilities under the AI Act are minimal if they use the AI system without changing it. If they modify the system significantly or use it under their own name or trademark, they take on the Provider’s responsibilities, as if they were the original Provider.

- Distributor

An individual or organization making an AI system available on the EU market, acting as an intermediary between Provider and user.

- Importer

Any natural or legal person based in the EU who brings an AI system into the EU market from outside the EU. This role is particularly relevant for North American organizations selling AI systems to EU customers.

- Product Manufacturer

An individual or organization that places on the EU market, or puts into service, an AI system as part of another product and brands it with their own name or trademark.

- Authorized Representative

An individual or organization based in the EU that has been formally appointed by a Provider located outside the EU. This role is especially important for North American companies without EU offices.

Representatives are responsible for managing and fulfilling regulatory obligations and documentation required by the AI Act on behalf of Providers. This is similar to the GDPR Representative role, although documentation is more detailed and extensive. This is because the AI Act involves complex regulatory requirements for AI systems, covering a broad range of technical, operational, and safety aspects.

Provider or Deployer?

Carefully assess whether you’re a Provider or Deployer, as this will significantly affect your compliance responsibilities. It’s important to make sure that the way you deploy an AI system doesn’t inadvertently make you responsible as a Provider.

While most obligations fall on Providers, Deployers also have various responsibilities.
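
The Provider/Deployer distinction described above can be written down as a simple decision rule. The Python sketch below is illustrative only: the `SystemUse` fields and the `effective_role` function are hypothetical names, and whether a modification counts as "significant" is a legal judgment that a boolean flag cannot capture.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "Provider"
    DEPLOYER = "Deployer"


@dataclass(frozen=True)
class SystemUse:
    """Hypothetical description of how an organization relates to an AI system."""
    developed_and_placed_on_market: bool  # built the system and put it on the market
    significantly_modified: bool          # changed a third-party system significantly
    own_name_or_trademark: bool           # uses the system under its own brand


def effective_role(use: SystemUse) -> Role:
    """Return the role whose responsibilities apply, following the rules above."""
    if use.developed_and_placed_on_market:
        return Role.PROVIDER
    # A Deployer that modifies the system significantly, or uses it under its
    # own name or trademark, takes on the Provider's responsibilities.
    if use.significantly_modified or use.own_name_or_trademark:
        return Role.PROVIDER
    return Role.DEPLOYER


unchanged = SystemUse(False, False, False)
rebranded = SystemUse(False, False, True)
print(effective_role(unchanged).value)  # Deployer
print(effective_role(rebranded).value)  # Provider
```

A check like this is best treated as a prompt for legal review rather than a substitute for it.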

Common requirements for Providers AND Deployers

AI literacy – Providers and Deployers must ensure all staff and agents using AI systems have the appropriate knowledge. This depends on their roles and the associated risks, but is similar to mandatory data protection training under the GDPR.

Transparency – Providers and Deployers must ensure any AI system interacting with individuals (termed ‘natural persons’ in the Act) meets transparency obligations, such as clearly marking content generated or manipulated by AI.

Registration – similar to data protection registration with a supervisory authority, Providers, and in some cases Deployers, must register high-risk AI systems in the EU’s database.
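
As one illustration of the transparency requirement above, a customer service chatbot could attach a visible disclosure to every AI-generated reply. This is a minimal Python sketch: the `label_ai_content` function and the wording of the notice are assumptions for illustration, not text prescribed by the AI Act.

```python
AI_NOTICE = "[AI-generated content]"  # hypothetical wording, not mandated text


def label_ai_content(text: str, notice: str = AI_NOTICE) -> str:
    """Prefix AI-generated text with a visible disclosure, without duplicating it."""
    if text.startswith(notice):
        return text
    return f"{notice} {text}"


print(label_ai_content("Your order has shipped."))
# [AI-generated content] Your order has shipped.
```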

Provider-specific obligations

Because Providers design, develop, and bring AI systems to market, they bear primary responsibility for ensuring those systems meet safety and ethical standards. They also control how AI systems are created and operated, so they are crucial to ensuring systems meet the required standards for safety, effectiveness, and ethics.

Transparency and accountability: Two key principles of the AI Act

Providers must ensure their AI system is easy to understand, and clearly communicate its functionalities, limitations, and potential risks. This helps users know exactly what to expect and how to use it safely and effectively.

Key requirements for Providers include:

- Imposing responsibilities on importers and distributors – ensure all parties in the AI supply chain know about and adhere to their compliance standards, including completion of conformity assessments

- Establishing a risk management system – a structured process to regularly review the AI system, identifying, evaluating and mitigating any risks

- Implementing effective data governance – develop clear procedures and processes for managing training data, including ensuring diversity and establishing protocols for data handling and data protection

- Preparing technical documentation – create detailed and accessible documentation about the AI system’s design, functionality, and performance to enable user understanding BEFORE it goes on the market

- Maintaining event logs – set up automatic logging systems to track the AI system’s operations and any issues that may arise

- Creating usage documentation for Deployers – provide Deployers with clear and comprehensive guides on how to use the AI system (Deployers must also maintain documentation relevant to their use of the system, if it differs)

- Establishing human oversight – design the AI system to allow for human intervention and monitoring (also impacts Deployers)

- Ensuring accuracy and robustness – confirm the AI system is reliable and resilient in its operations, and suitable for its intended purpose

- Implementing cybersecurity measures – integrate strong cybersecurity practices to protect the AI system from potential threats

- Maintaining a quality management system – establish a quality management system to oversee ongoing development of the AI system

- Addressing issues and conformity – quickly address any issues with the AI system and withdraw any systems that don’t meet compliance standards (also impacts Deployers)

- Completing documentation and assessments – complete all documentation and conformity assessments accurately, and retain for at least 10 years

- Appointing a Representative – if needed, appoint a Representative to support compliance obligations and act as a point of contact between the Provider and regulatory authorities (particularly relevant for North American Providers, which are based outside the EU)

- Cooperating with supervisory authorities – be ready to liaise with regulatory bodies, providing requested information and helping with inspections or audits to show compliance

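
Two of the requirements above, maintaining event logs and retaining documentation for at least 10 years, lend themselves to a short illustration. The Python sketch below uses only the standard library. The logger name, the event fields, and the `retention_end` helper are hypothetical, the AI Act does not prescribe this format, and the date from which the retention period runs is an assumption to confirm against the Act.

```python
import json
import logging
from datetime import date, datetime, timezone

logger = logging.getLogger("ai_system.events")  # hypothetical logger name


def log_event(event_type: str, detail: str) -> str:
    """Record one operational event as a JSON line and return that line."""
    record = json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": event_type,
        "detail": detail,
    })
    logger.info(record)
    return record


def retention_end(start: date, years: int = 10) -> date:
    """Earliest date on which documentation dated `start` could be discarded."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # 29 February with a non-leap target year: roll forward to 1 March.
        return date(start.year + years, 3, 1)


log_event("inference", "credit assessment completed")
print(retention_end(date(2026, 8, 2)))  # 2036-08-02
```

Writing each event as a single JSON line keeps the log easy to search and export if a supervisory authority asks for records.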

In summary

The EU AI Act is a landmark piece of legislation, setting the first global standards for the responsible development and deployment of artificial intelligence systems.

As with many new regulations, the EU’s AI legislation has sparked concerns and debates among various stakeholders, including industry associations, tech companies, and legal professionals.

Their concerns echo the initial criticisms that surrounded the introduction of the EU’s General Data Protection Regulation (GDPR), namely the potential difficulties for organizations and businesses in interpreting and implementing its provisions.

However, despite its complexity, the AI Act, much like the GDPR, has a structured approach that makes implementation more manageable. There are clear definitions for the six roles in the AI supply chain. Each role comes with specific compliance obligations, with the Provider role having the greatest responsibilities.

Strategic Considerations for North American Organizations

With the AI Act coming into full effect in August 2026, it’s essential that North American organizations operating in EU markets familiarize themselves with the compliance obligations and how they apply.

Complying with the AI Act could serve as a market differentiator and a unique selling point that attracts clients and partners who value responsible and ethical AI practices.

If your organization would benefit from specialist data protection or AI governance advice for EU or UK markets, please contact us.

EU AI Act compliance part 4: Essential strategies for North American organizations

Coming next, in the final part of this blog series, we explore some of the best practices to guide you in meeting compliance requirements.
